Random Forest Classifier

Here the Random Forest Classifier is tested to see how several of its parameters affect the accuracy of its output, using the first two features of the iris dataset.

Python program:

>>> import numpy as np

>>> import matplotlib.pyplot as plt

>>> from matplotlib.colors import ListedColormap

>>> from sklearn import ensemble, datasets

>>> iris = datasets.load_iris()

>>> x = iris.data[:, :2]

>>> y = iris.target

>>> h = .02

>>> cmap_bold = ListedColormap(['firebrick', 'lawngreen', 'b'])

>>> cmap_light = ListedColormap(['pink', 'palegreen', 'lightcyan'])

Plotting the analysis:

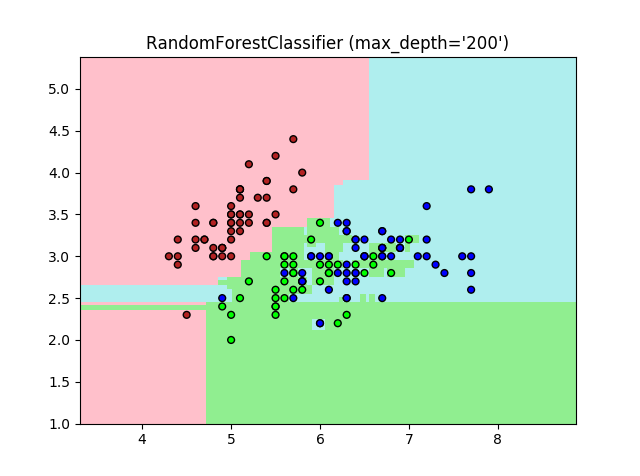
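Each experiment below repeats the same mesh-grid steps before plotting. A minimal sketch of a helper that factors them out, assuming the same iris features and step size h = 0.02 as above; the name `decision_grid` is hypothetical and not part of scikit-learn, and `random_state=0` is added only so the example is repeatable.

```python
import numpy as np
from sklearn import datasets, ensemble

def decision_grid(clf, x, h=0.02):
    """Hypothetical helper: predict clf over a mesh covering the 2-feature data x.

    Returns the mesh coordinates xx, yy and the predicted class z reshaped
    to the mesh, ready to pass to plt.pcolormesh(xx, yy, z).
    """
    x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
    y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
    xx, yy = np.meshgrid(np.arange(x_min, x_max, h),
                         np.arange(y_min, y_max, h))
    # Predict every grid point, then fold the flat result back into the mesh shape.
    z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    return xx, yy, z.reshape(xx.shape)

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target
clf = ensemble.RandomForestClassifier(random_state=0).fit(x, y)
xx, yy, z = decision_grid(clf, x)
```

With this helper, each loop body reduces to fitting `clf`, calling `decision_grid`, and passing the result to `plt.pcolormesh` and `plt.scatter` as before.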

a) Effect of maximum depth (max_depth):

>>> for max_depth in [20, 50, 200, 500]:
...     clf = ensemble.RandomForestClassifier(max_depth=max_depth)
...     clf.fit(x, y)
...     # Build a grid covering the data, with a 1-unit margin on each side
...     x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
...     y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
...     xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
...     # Predict every grid point and reshape to the grid for plotting
...     z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
...     z = z.reshape(xx.shape)
...     plt.figure()
...     plt.pcolormesh(xx, yy, z, cmap=cmap_light)
...     plt.scatter(x[:, 0], x[:, 1], c=y, cmap=cmap_bold, edgecolor='k', s=24)
...     plt.xlim(xx.min(), xx.max())
...     plt.ylim(yy.min(), yy.max())
...     plt.title("RandomForestClassifier (max_depth='%s')" % (max_depth))
...
>>> plt.show()

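Increasing max_depth appears to increase accuracy: deeper trees can fit the training points more closely. Note that the plots show decision regions only; no accuracy score is computed, and a closer fit to the training points does not necessarily mean higher accuracy on unseen data.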
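To put numbers on this, one option is to compare training accuracy with a cross-validated estimate for each depth. This is a sketch, assuming 5-fold `cross_val_score` is an acceptable proxy for accuracy on unseen data; the depths 1 and 2 are added here only for contrast, and `random_state=0` is an assumption that makes runs repeatable.

```python
from sklearn import datasets, ensemble
from sklearn.model_selection import cross_val_score

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target

results = {}
# 1 and 2 are added for contrast; 20 is from the list above; None means unlimited depth.
for max_depth in [1, 2, 20, None]:
    clf = ensemble.RandomForestClassifier(max_depth=max_depth, random_state=0)
    train_acc = clf.fit(x, y).score(x, y)             # accuracy on the points used for fitting
    cv_acc = cross_val_score(clf, x, y, cv=5).mean()  # mean accuracy on 5 held-out folds
    results[max_depth] = (train_acc, cv_acc)
    print(f"max_depth={max_depth}: train={train_acc:.3f}, cv={cv_acc:.3f}")
```

A large gap between the `train` and `cv` columns is the usual sign that extra depth is fitting noise rather than improving accuracy.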

b) Effect of maximum leaf nodes (max_leaf_nodes):

>>> for max_leaf_nodes in [2, 5, 10, 25, 50, 125, 250, 500, 1250, 2500, 5000, None]:
...     clf = ensemble.RandomForestClassifier(max_leaf_nodes=max_leaf_nodes)
...     clf.fit(x, y)
...     x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
...     y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
...     xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
...     z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
...     z = z.reshape(xx.shape)
...     plt.figure()
...     plt.pcolormesh(xx, yy, z, cmap=cmap_light)
...     plt.scatter(x[:, 0], x[:, 1], c=y, cmap=cmap_bold, edgecolor='k', s=24)
...     plt.xlim(xx.min(), xx.max())
...     plt.ylim(yy.min(), yy.max())
...     plt.title("RandomForestClassifier (max_leaf_nodes='%s')" % (max_leaf_nodes))
...
>>> plt.show()

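Increasing max_leaf_nodes appears to increase output accuracy, since trees with more leaves can carve out finer decision regions. (max_leaf_nodes=None means the number of leaves is unlimited.)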
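Every leaf holds at least one training sample, so a tree fit on the 150 iris samples cannot grow more than 150 leaves; beyond that point a larger max_leaf_nodes cannot constrain the trees. A minimal sketch that checks how many leaves the fitted trees actually use, assuming a fixed `random_state=0`; `max_leaves_grown` is a hypothetical helper name.

```python
from sklearn import datasets, ensemble

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target

def max_leaves_grown(max_leaf_nodes):
    """Hypothetical helper: largest leaf count among the forest's fitted trees."""
    clf = ensemble.RandomForestClassifier(max_leaf_nodes=max_leaf_nodes, random_state=0)
    clf.fit(x, y)
    return max(tree.get_n_leaves() for tree in clf.estimators_)

for max_leaf_nodes in [2, 5, 10, 25, 50, 125, 5000, None]:
    print(max_leaf_nodes, max_leaves_grown(max_leaf_nodes))
```

If the printed leaf counts level off, the remaining values in the list above should give essentially the same kind of forest.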

c) Effect of the minimum number of samples per leaf (min_samples_leaf):

>>> for min_samples_leaf in [1, 2, 3, 4, 5, 6, 7, 8]:
...     # The scikit-learn parameter is min_samples_leaf (plural "samples")
...     clf = ensemble.RandomForestClassifier(min_samples_leaf=min_samples_leaf)
...     clf.fit(x, y)
...     x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
...     y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
...     xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
...     z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
...     z = z.reshape(xx.shape)
...     plt.figure()
...     plt.pcolormesh(xx, yy, z, cmap=cmap_light)
...     plt.scatter(x[:, 0], x[:, 1], c=y, cmap=cmap_bold, edgecolor='k', s=24)
...     plt.xlim(xx.min(), xx.max())
...     plt.ylim(yy.min(), yy.max())
...     plt.title("RandomForestClassifier (min_samples_leaf='%s')" % (min_samples_leaf))
...
>>> plt.show()

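Increasing min_samples_leaf appears to decrease output accuracy: requiring more samples in every leaf stops the trees from isolating small groups of points, so the decision regions become smoother.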
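The constraint can be seen directly in the fitted trees. A minimal sketch, assuming a fixed `random_state=0`, that reads each tree's low-level `tree_` arrays and reports the smallest leaf in the forest; `smallest_leaf` is a hypothetical helper name.

```python
from sklearn import datasets, ensemble

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target

def smallest_leaf(min_samples_leaf):
    """Hypothetical helper: fewest training samples in any leaf of any tree."""
    clf = ensemble.RandomForestClassifier(min_samples_leaf=min_samples_leaf, random_state=0)
    clf.fit(x, y)
    sizes = []
    for est in clf.estimators_:
        t = est.tree_
        is_leaf = t.children_left == -1          # leaves have no left child
        sizes.append(t.n_node_samples[is_leaf].min())
    return min(sizes)

for min_samples_leaf in [1, 2, 4, 8]:
    print(min_samples_leaf, smallest_leaf(min_samples_leaf))
```

Because no leaf may hold fewer than min_samples_leaf samples, isolated points end up sharing a leaf with their neighbours, which is what smooths the plotted regions.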

d) Effect of number of estimators (n_estimators):

>>> for n_estimators in [1, 2, 5, 10, 25, 50, 125, 250, 500, 1250, 2500]:
...     clf = ensemble.RandomForestClassifier(n_estimators=n_estimators)
...     clf.fit(x, y)
...     x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
...     y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
...     xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
...     z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
...     z = z.reshape(xx.shape)
...     plt.figure()
...     plt.pcolormesh(xx, yy, z, cmap=cmap_light)
...     plt.scatter(x[:, 0], x[:, 1], c=y, cmap=cmap_bold, edgecolor='k', s=24)
...     plt.xlim(xx.min(), xx.max())
...     plt.ylim(yy.min(), yy.max())
...     plt.title("RandomForestClassifier (n_estimators='%s')" % (n_estimators))
...
>>> plt.show()

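In these plots, increasing n_estimators appeared to decrease output accuracy. Adding trees averages more votes, so the main effect to expect is smoother, more stable decision regions rather than a closer fit to individual training points.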
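The averaging is easy to check: scikit-learn computes a forest's class probabilities as the mean of its trees' predicted probabilities. A minimal sketch that rebuilds this mean by hand from `clf.estimators_`, assuming 25 trees and `random_state=0` purely to keep it quick and repeatable.

```python
import numpy as np
from sklearn import datasets, ensemble

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target

clf = ensemble.RandomForestClassifier(n_estimators=25, random_state=0).fit(x, y)

# Stack each tree's class probabilities: shape (n_trees, n_samples, n_classes).
per_tree = np.stack([tree.predict_proba(x) for tree in clf.estimators_])
manual = per_tree.mean(axis=0)  # average over the trees

print(np.allclose(manual, clf.predict_proba(x)))
```

With more trees, each tree's vote carries less weight, which is why large n_estimators values mostly stabilise the boundaries rather than sharpen them.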

e) Effect of the number of parallel jobs (n_jobs) on output:

>>> for n_jobs in [-1, 2, 5, 10, 25, 50, 125, 250, 500, 1250, 2500]:
...     # n_jobs=-1 uses all available processors
...     clf = ensemble.RandomForestClassifier(n_jobs=n_jobs)
...     clf.fit(x, y)
...     x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
...     y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
...     xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
...     z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
...     z = z.reshape(xx.shape)
...     plt.figure()
...     plt.pcolormesh(xx, yy, z, cmap=cmap_light)
...     plt.scatter(x[:, 0], x[:, 1], c=y, cmap=cmap_bold, edgecolor='k', s=24)
...     plt.xlim(xx.min(), xx.max())
...     plt.ylim(yy.min(), yy.max())
...     plt.title("RandomForestClassifier (n_jobs='%s')" % (n_jobs))
...
>>> plt.show()

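The effect of increasing n_jobs is inconclusive, which is expected: n_jobs only sets how many jobs run in parallel during fitting and prediction, so it changes speed, not the fitted model. Because random_state is not set, any differences between these plots come from each run building a different random forest.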
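One way to confirm that n_jobs does not change the model is to fix the seed and compare predictions across several n_jobs values. A minimal sketch, assuming `random_state=0`; it compares probabilities with `np.allclose` rather than exact equality because parallel summation can differ in the last floating-point bits.

```python
import numpy as np
from sklearn import datasets, ensemble

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target

# Same seed, different degrees of parallelism.
probas = {}
for n_jobs in [1, 2, -1]:
    clf = ensemble.RandomForestClassifier(n_jobs=n_jobs, random_state=0)
    clf.fit(x, y)
    probas[n_jobs] = clf.predict_proba(x)

for n_jobs, p in probas.items():
    print(n_jobs, np.allclose(probas[1], p))
```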

f) Effect of the random seed (random_state) on output:

>>> for random_state in [1, 2, 5, 20, 50, 100, 200, 500]:
...     clf = ensemble.RandomForestClassifier(random_state=random_state)
...     clf.fit(x, y)
...     x_min, x_max = x[:, 0].min() - 1, x[:, 0].max() + 1
...     y_min, y_max = x[:, 1].min() - 1, x[:, 1].max() + 1
...     xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
...     z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
...     z = z.reshape(xx.shape)
...     plt.figure()
...     plt.pcolormesh(xx, yy, z, cmap=cmap_light)
...     plt.scatter(x[:, 0], x[:, 1], c=y, cmap=cmap_bold, edgecolor='k', s=24)
...     plt.xlim(xx.min(), xx.max())
...     plt.ylim(yy.min(), yy.max())
...     plt.title("RandomForestClassifier (random_state='%s')" % (random_state))
...
>>> plt.show()

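The effect of increasing random_state on output accuracy is inconclusive, as expected: random_state only seeds the randomness used for bootstrapping the samples and choosing the features to consider at each split, so its numeric value has no meaningful order. Different seeds give slightly different forests; the same seed reproduces the same forest.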
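Reproducibility is the practical use of random_state. A minimal sketch that fits two forests with the same seed and one with a different seed and compares their predicted probabilities; `forest_proba` is a hypothetical helper name.

```python
import numpy as np
from sklearn import datasets, ensemble

iris = datasets.load_iris()
x, y = iris.data[:, :2], iris.target

def forest_proba(random_state):
    """Hypothetical helper: class probabilities on the training points for one seed."""
    clf = ensemble.RandomForestClassifier(random_state=random_state)
    return clf.fit(x, y).predict_proba(x)

same_seed = np.array_equal(forest_proba(0), forest_proba(0))   # same seed: identical forest
other_seed = np.array_equal(forest_proba(0), forest_proba(1))  # different seed: usually differs
print(same_seed, other_seed)
```

Fixing random_state in the earlier experiments would make their plots differ only because of the parameter being varied, not because of the random draws.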
